Calvin Wankhede / Android Authority

TL;DR

- ChatGPT’s new Advanced Voice mode has been delayed by at least one month.

- OpenAI is currently working on improving the model’s safety and reliability.

- The feature will be available to select users as a limited alpha soon, with a full release slated for fall 2024.

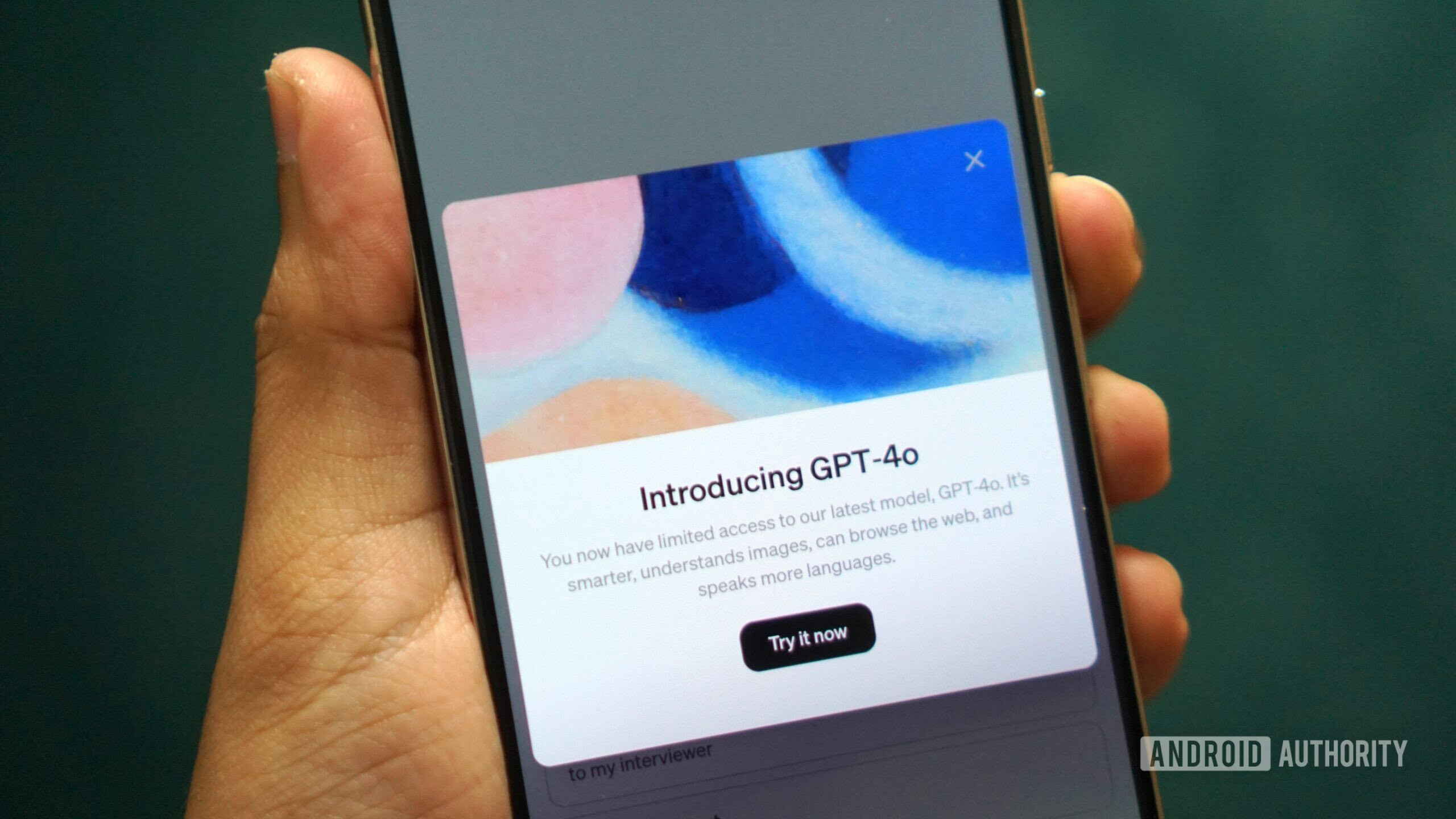

Last month, I wrote about how one of GPT-4o’s headlining features wouldn’t see the light of day for a few more weeks. The feature in question was an advanced voice conversation mode built into the ChatGPT smartphone app, with capabilities far beyond any personal assistant we’ve seen so far. Fast forward to today, however, and OpenAI has announced that the feature won’t be ready for at least another month.

In a recent tweet, OpenAI said that it had originally planned to start rolling out the feature to select users in late June. However, the company has decided that it needs another month to focus on safety. In OpenAI’s own words, the company is “improving the model’s ability to detect and refuse certain content.”

OpenAI also cited infrastructure-related challenges as a reason for the delay. That isn’t surprising given that ChatGPT has suffered numerous outages within the past month alone. Even before that, I’d personally noticed hitches and artifacts while using the regular voice conversation mode. GPT-4o’s new voice mode may also be more computationally intensive, especially since OpenAI promises it can respond to audio inputs in as little as 232 milliseconds.

But even though OpenAI said that it will only open up access to the new voice mode next month, a small number of users have reportedly already started seeing an in-app invitation to test the feature. The page describes “Advanced Voice” as a new feature in “limited alpha.” However, accepting the invitation doesn’t seem to unlock the new voice mode, so it might simply be a pop-up appearing earlier than intended.

OpenAI’s tweet, meanwhile, suggests that alpha access will open up next month to a small group of users, with general availability slated for fall. However, the company warns that the release timeline will depend on meeting internal safety and reliability standards.

What can ChatGPT’s Advanced Voice mode do?

We got our first glimpse of GPT-4o’s new voice mode at OpenAI’s Spring Update event in early May. The company released a series of demos in the following weeks, showing ChatGPT not just engaging in rapid, back-and-forth discussion but also modulating its voice to convey sarcasm, laughter, and more. OpenAI has also claimed that the model will be able to detect emotion in the user’s voice and react accordingly, a first for any chatbot.

A handful of sample videos also combined GPT-4o’s voice and visual capabilities, allowing the chatbot to answer questions about real-life situations. In one such demo, Khan Academy founder Sal Khan showcased how the feature could be used as a teaching tool for on-screen math problems.

According to OpenAI’s tweet, the new video and screen-sharing features will debut separately from the voice mode. However, all of these advanced capabilities will be locked behind the company’s paid ChatGPT Plus subscription. Until now, the $20-per-month subscription only unlocked text-based access to the GPT-4o model as well as supplementary features like custom GPTs.
