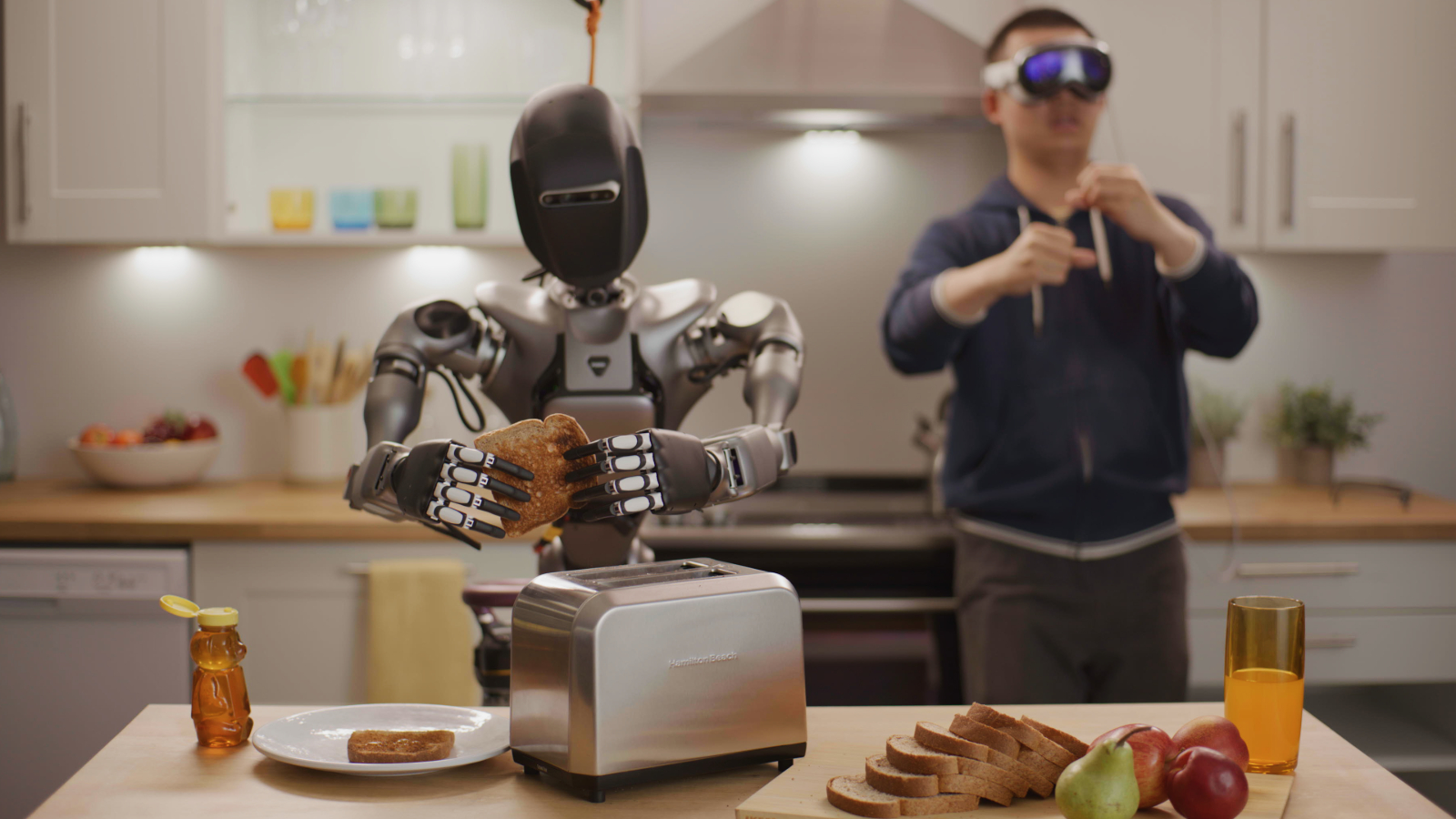

Apple may not have much use for NVIDIA, but that doesn't mean NVIDIA has no use for Apple. Specifically, NVIDIA uses the Apple Vision Pro headset to help develop its humanoid robot. See how below:

The video is part of a campaign to promote NVIDIA's work in robotics.

In the press release, Apple Vision Pro appears twice as an instrument for controlling NVIDIA's robots, in connection with the MimicGen NIM microservice:

> Training foundation models for humanoid robots requires an incredible amount of data. One way of capturing human demonstration data is using teleoperation, but this is becoming an increasingly expensive and lengthy process.
>
> An NVIDIA AI- and Omniverse-enabled teleoperation reference workflow, demonstrated at the SIGGRAPH computer graphics conference, allows researchers and AI developers to generate massive amounts of synthetic motion and perception data from a minimal amount of remotely captured human demonstrations.
>
> First, developers use Apple Vision Pro to capture a small number of teleoperated demonstrations. Then, they simulate the recordings in NVIDIA Isaac Sim and use the MimicGen NIM microservice to generate synthetic datasets from the recordings.
>
> The developers train the Project GR00T humanoid foundation model with real and synthetic data, enabling developers to save time and reduce costs. They then use the Robocasa NIM microservice in Isaac Lab, a framework for robot learning, to generate experiences to retrain the robot model. Throughout the workflow, NVIDIA OSMO seamlessly assigns computing jobs to different resources, saving the developers weeks of administrative tasks.

Read NVIDIA's full press release for the deep dive.
