Edgar Cervantes / Android Authority

TL;DR

- Google has announced a new experimental YouTube notes feature.

- This tool allows the public to add context to YouTube videos.

- So far, it is only available on mobile in the US in English and only for a “limited number of eligible contributors.”

A good presenter in a well-produced YouTube video will tell you information clearly and confidently. However, sometimes that information isn’t entirely accurate, either because the presenter has made an honest mistake or, in some cases, is purposely trying to mislead you. When this happens, viewers currently have little recourse.

Thanks to a new YouTube notes experiment, that could change. Starting today, in the United States, for “a limited number of eligible contributors” who speak English, YouTube will allow notes to be added to public videos. The way Google describes it in its blog post, the feature seems to be quite similar to Community Notes on X (formerly Twitter). Essentially, it allows viewers to add context to a specific claim in a video so other viewers can be more aware of the facts surrounding it.

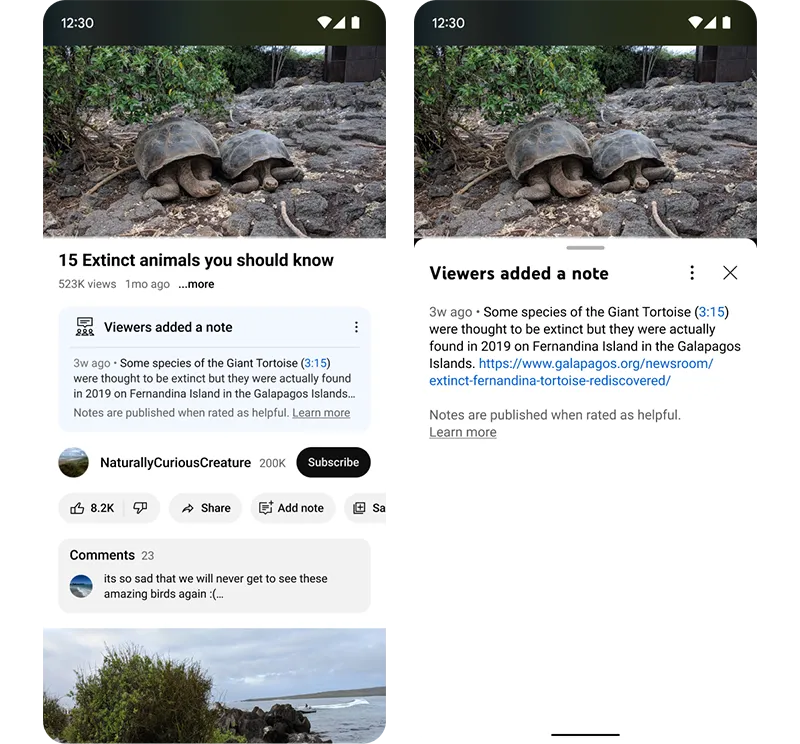

You can see a mock example of this below:

In the example, the video claims that the Giant Tortoise is an extinct animal. However, this is inaccurate, as Giant Tortoises were found alive in the Galapagos Islands in 2019. This is likely an honest mistake by the video creator because, before that discovery, it was widely accepted that the Giant Tortoise was extinct. In this mock case, viewers added a public note to the video explaining the inaccuracy and linking to a source that supports the correction.

Ideally, people who watch this video will see this note and understand that the Giant Tortoise isn’t actually extinct, despite the video’s claims.

YouTube notes: How does it work?

First, an eligible user must submit a note related to a particular claim in a video. This note will go to third-party evaluators, who, Google points out, are the same people who provide feedback on YouTube’s search results and recommendations. These evaluators will then decide whether a note should become public.

Once a note is public, other viewers can rate the note in one of three ways: “helpful,” “somewhat helpful,” or “unhelpful.” They’ll also be able to provide their own context and sources for why they chose their rating.

At this point, an algorithm takes over and decides which notes to publish. This algorithm is adaptive, so if a note is rated as “unhelpful” for a time but then many people start rating it as “helpful” because new information has come to light, the algorithm will adjust accordingly.

What does this mean for information on YouTube?

Google makes it clear that this is a very limited experiment. However, the ramifications of YouTube notes are actually quite significant. Misinformation proliferates on YouTube at an astounding rate, but some viewers will still watch videos and think whatever the presenter says is completely factual. This can result in the spread of that misinformation, which could have devastating effects on society.

Time will tell if this experimental notes feature actually helps fix this problem. For what it’s worth, Community Notes on X have done a lot of good when it comes to pointing out inaccurate claims or statements that need more context to be fully understood.

However, YouTube notes could also be abused in the same way the platform itself is abused by bad actors wishing to spread falsehoods. It will be interesting to see how Google’s third-party evaluators and algorithms combat this.

In the meantime, the general public should start seeing notes appear on some videos “in the coming weeks and months.”

