It’s been a few months since I first picked up the Rabbit R1 to see if it could help me in everyday life. At launch, the answer was a firm no — the companion only had a few integrations, and even those only worked about half the time. It was so bad, in fact, that I relegated it to my closet, essentially ignoring it until the day Rabbit started making good on its initial promises. Now, that day might be here. Rabbit’s trainable LAM Playground is finally live, and I decided to give it a spin.

Can’t CAPTCHA me

Ryan Haines / Android Authority

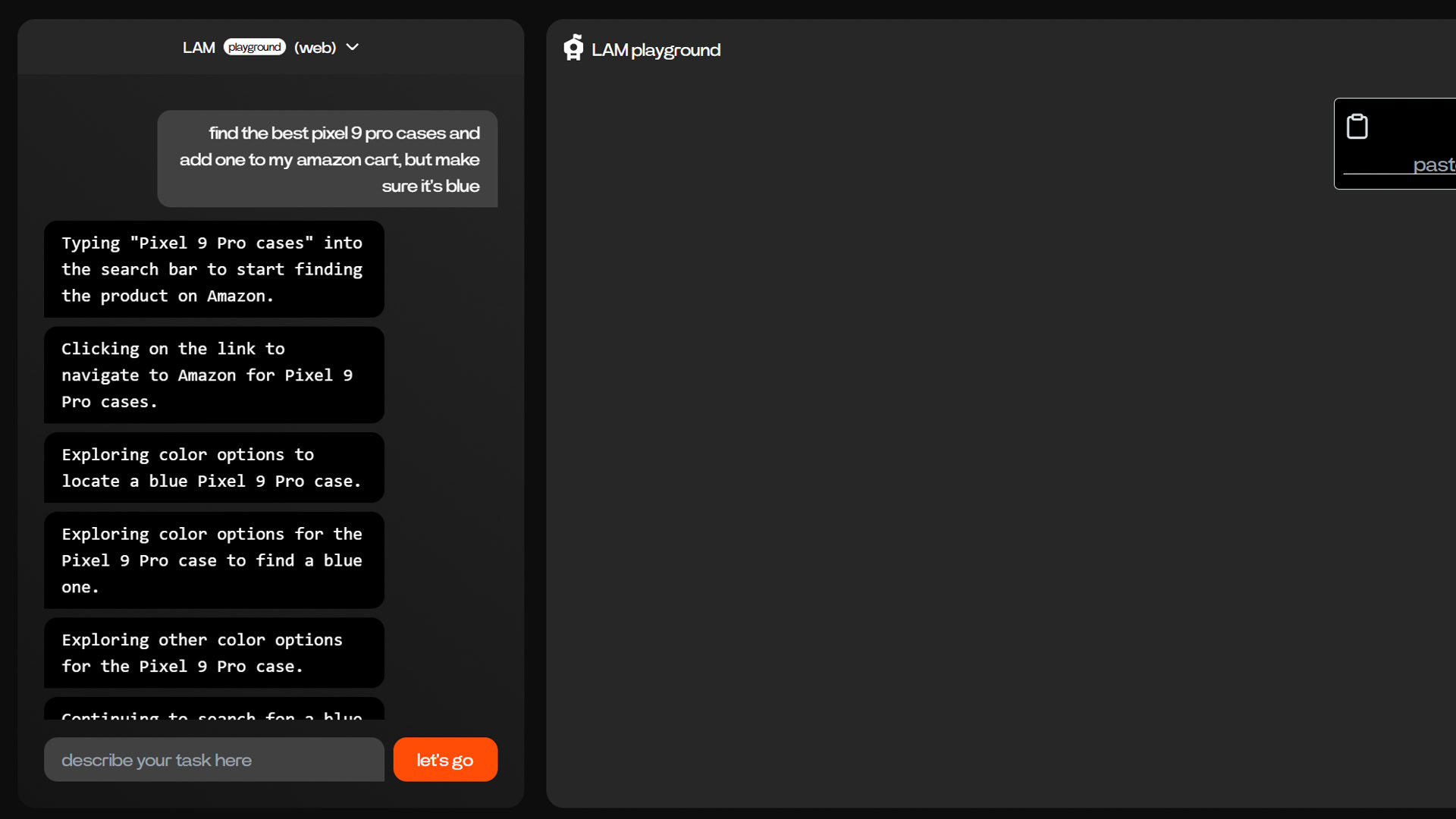

Thankfully, when you open the LAM Playground for the first time, Rabbit is kind enough to offer you a few prompts as suggestions. After all, it’s one thing to say that you can train your AI model, but it’s another thing to know where to start. So, I took Rabbit’s advice and began looking for a new phone case. I’m currently reviewing the iPhone 16 Pro, but I never got around to grabbing a case compatible with the Camera Control, so I asked Rabbit to “find me the best clear iPhone 16 Pro case and add it to my Amazon cart.”

It got off to a flying start: Rabbit quickly navigated to Google and searched for exactly what I wanted. But when it tried to select the case (Spigen’s Ultra Hybrid, if you were curious), Amazon hit it with a text-based CAPTCHA, a test that Rabbit couldn’t figure out how to pass. I was able to lend a hand and type in the text myself, but it didn’t matter. Amazon had figured out that I was at least half-robot, and I was blocked.

The LAM Playground can find what you want, but it still can't find a way past CAPTCHA.

Not completely put off by my first experience, I decided to try again. This time, I looked for the “best taco restaurants in Baltimore, Maryland, and whether or not I need a reservation.” Once again, the rabbits got to work, heading to Google and looking for my search term. This time, the LAM Playground navigated me to Tripadvisor, where it faced a different kind of CAPTCHA — one where you must line up a puzzle piece on an image. Once again, I lent it a hand, but once again, I was blocked.

As they say, the third time is the charm, so I went back to looking for a phone case. This time, I wanted some protection for my Pixel 9 Pro, but I wanted a specific color. I rolled with “find the best Pixel 9 Pro cases and add one to my Amazon cart, but make sure it’s blue.” To its credit, the LAM Playground successfully found some Pixel 9 Pro cases and made it to Amazon without a CAPTCHA test, but then a new problem arose: Rabbit is still incredibly stubborn. The LAM Playground simply grabbed the top case from Amazon’s list and began to look for a blue color option. Unfortunately for Rabbit, there wasn’t one. Undeterred, it cycled through the same five color options for the same case for about 20 minutes, praying that one of them would magically turn blue. None did. Eventually, the request timed out, and so did my patience.

That’s not how you play the (Wikipedia) game

Ryan Haines / Android Authority

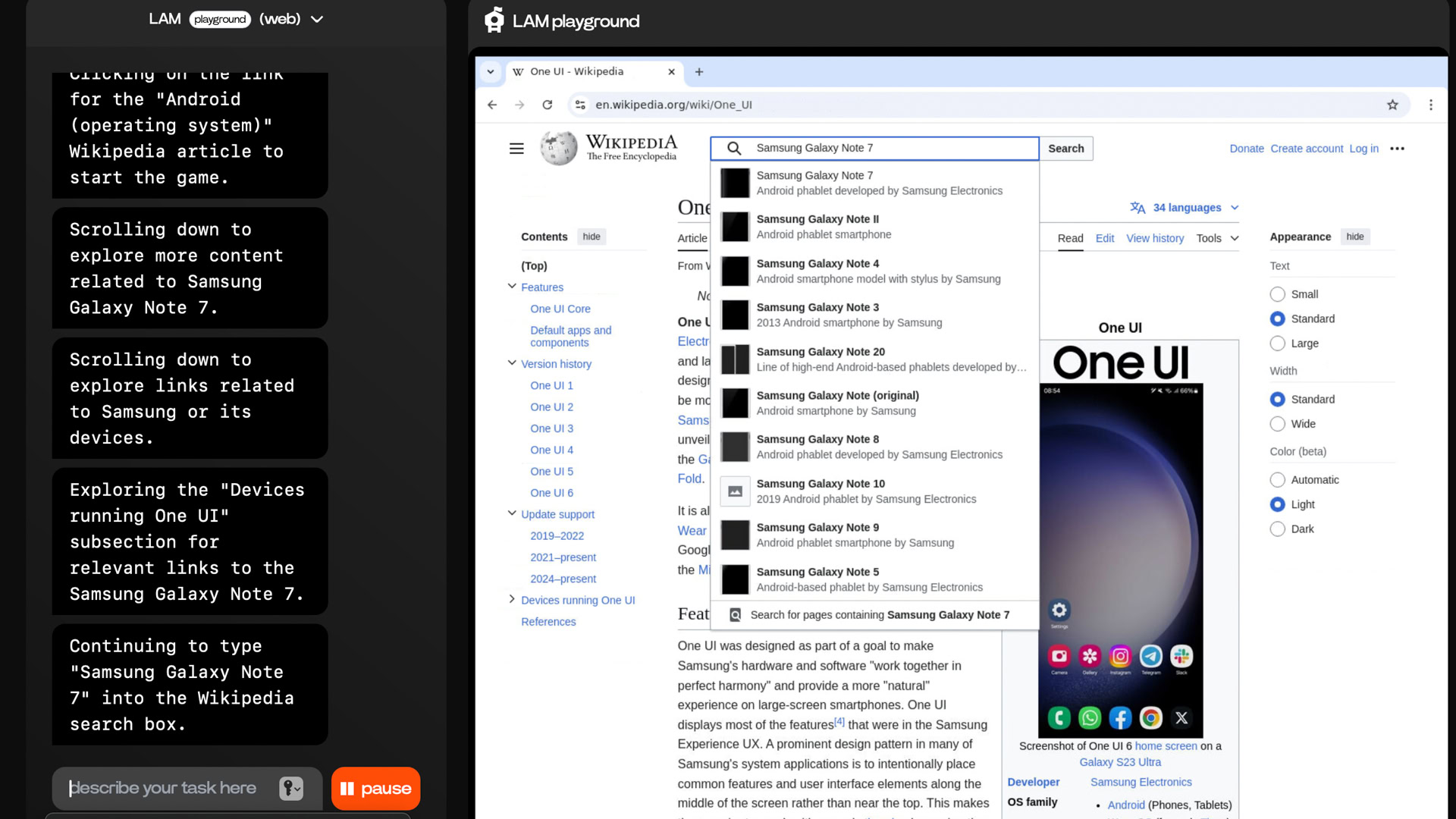

Another prompt Rabbit suggested when I fired up the LAM Playground was a quick trip through the Wikipedia game. It suggested navigating from “rabbit” to “artificial intelligence,” but I decided to swap in my own terms. I requested that the rabbits take me from the “Android” page to the “Samsung Galaxy Note 7” page — a bit of a jump, but certainly nothing that should take more than a few clicks.

Once again, everything started smoothly. The LAM Playground easily found its way to the Android page on Wikipedia. It then began to scroll slowly while looking for a link to Samsung in general. The model selected a link to One UI, which launched after the Galaxy Note 7, and suddenly found itself stumped. It scrolled up and down, apparently not seeing anything it was happy with. So, instead of choosing another link and taking the long way around, the LAM Playground went to Wikipedia’s search bar and typed “Samsung Galaxy Note 7.” It clicked on the link and then told me it had won the Wikipedia game.

I certainly didn't expect the LAM Playground to cheat at the Wikipedia game.

I had to inform the LAM Playground that searching for the final term was not, in fact, how you won the Wikipedia game, after which point it tried to do me one better. The rabbits opened Wikipedia’s source editor and headed to the “Terminology” section as if to change a live Wikipedia page to include the term I requested, which is entirely outside of the rules of the game — not to mention the last thing I expected.

And now, it’s time for me to get off this ride. Rabbit’s LAM Playground still feels like a rough and unfinished place, and it’s still reliant on me for far too much of the process. I don’t mind entering the commands to see how the rabbits respond, but letting it cheat through the Wikipedia game and relying on human input to pass a constant stream of CAPTCHA tests seems a little bit different from the smooth, automated experience that Rabbit pitched me on.

But hey, if you’re determined to try the LAM Playground for yourself, you can grab a Rabbit R1 down below.

Rabbit R1

Lightning-fast responses • Eye-catching design • Approachable price
