Calvin Wankhede / Android Authority

With competition from Google’s Gemini and Anthropic’s Claude AI models heating up, OpenAI has found itself in the midst of an identity crisis. Once the undisputed leader in large language models (LLMs), it’s now scrambling to maintain its position at the top. New models like GPT-4o and GPT-4o mini have stemmed the exodus to competing AI chatbots, but OpenAI is under constant pressure to keep innovating. The company has done just that with o1-preview, a new AI model series that excels at complex reasoning and emulating human thought. How good is it? I put it to the test to find out.

What is the new o1-preview ChatGPT model all about?

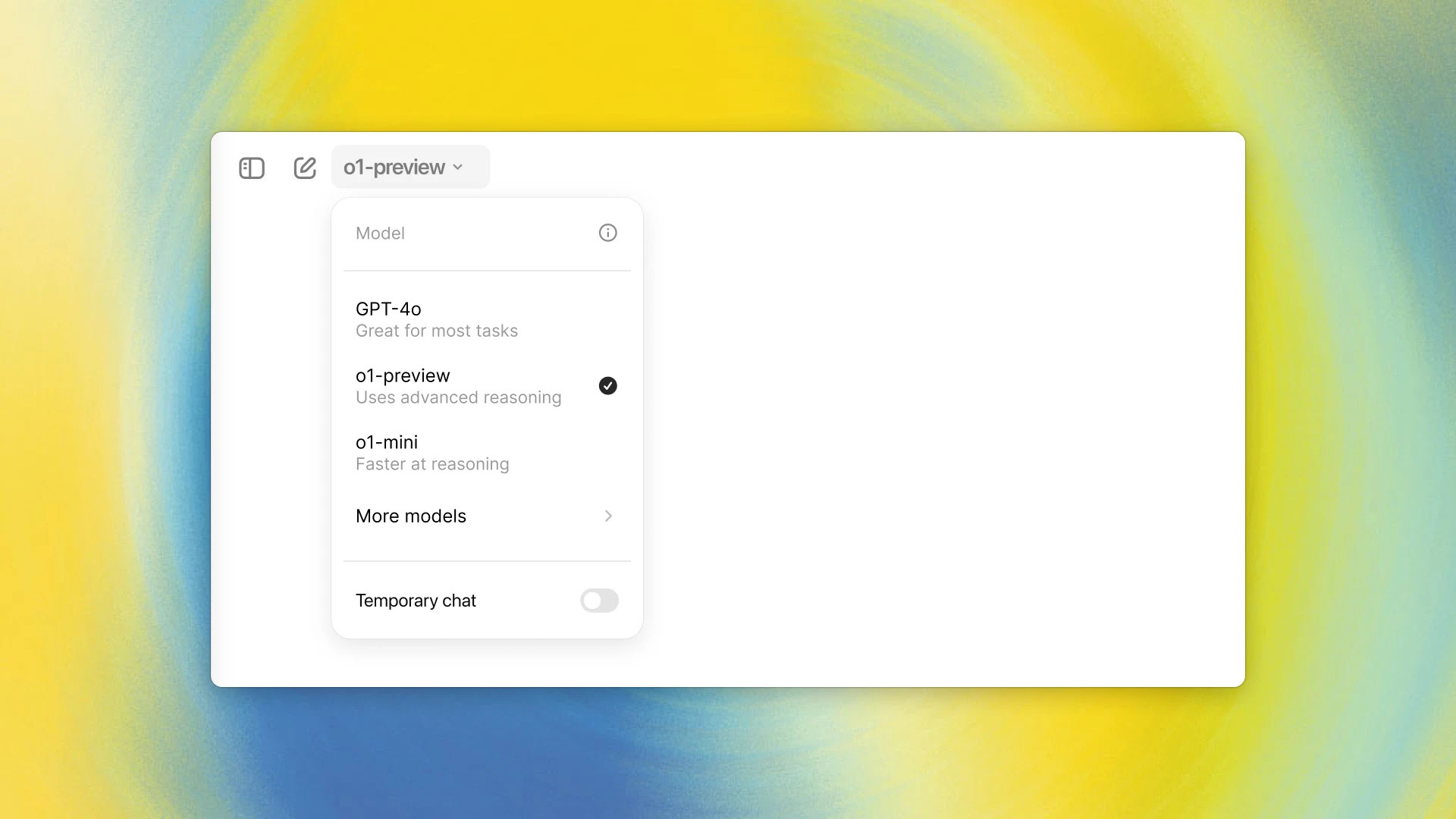

OpenAI’s o1-preview and o1-mini are the latest models available within ChatGPT, designed for complex reasoning tasks and problem-solving. As their names suggest, these models are not generational successors to GPT-4 or any of OpenAI’s previous language models. In fact, GPT-4o will not only continue to exist but also remain the default model for all chats.

Unlike prior models, which respond to your prompts as quickly as possible, the o1 series has been designed to spend more time thinking through problems, much like a human would. This should improve accuracy on math and coding prompts, but it is also useful for real-world questions and scenarios, as I’ll showcase in my testing below.

We first heard about the o1 model series in July, when Reuters interviewed researchers familiar with a secretive internal project codenamed Strawberry. The goal of the project was to develop an AI capable of performing “deep research,” in line with the company’s mission to achieve artificial general intelligence (AGI). The latter refers to an AI system that’s intelligent enough to outthink humans across multiple subjects. The Strawberry project was rumored to arrive ahead of GPT-5, which is still being developed.

o1 is OpenAI's latest model family that can break down problems and reason like a human.

The new o1 series is still a long way off from achieving true AGI. OpenAI CEO Sam Altman admitted that “o1 is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it.” However, it’s a big leap forward from the original ChatGPT release, which many believed would never succeed at solving math problems or logic exercises.

While o1-preview is the new flagship model, it’s accompanied by a much leaner and faster o1-mini. OpenAI found that the series excels at coding, so it released o1-mini as a smaller model that can accurately generate and debug code. Aimed mostly at developers, o1-mini is 80% cheaper than o1-preview.

o1-preview vs GPT-4o tested: Is it really better?

If you’re skeptical that o1-preview is leagues ahead of prior models, there’s good news: the chatbot genuinely pauses to think, sometimes for upwards of a minute, before responding. It breaks complex problems down into chunks, which helps it catch and correct errors.

However, there’s also bad news: the o1 series is not universally better. In particular, unlike GPT-4o, it cannot search the internet for new information, nor can it perform advanced data analysis. You also cannot upload files and images, meaning you’ll have to front-load each prompt with as much information and context as possible. OpenAI even admits that many ChatGPT users will want to stick with GPT-4o for the time being.

Setting aside those caveats, though, how does it perform? To find out, I posed a handful of confusing and complex questions to both of OpenAI’s best models. Here’s how o1-preview fared vs GPT-4o.

Prompt 1: How many legs do I have?

Starting with an easy one, I asked ChatGPT how many legs I’d have if I had 4 cows, 3 dogs, and 2 cats. The answer is obviously two, which GPT-4o eventually gave, but only after saying I’d have 36 animal legs. By contrast, I watched the o1-preview model “think” for five seconds before correctly (and confidently) saying I’d have two legs. It also acknowledged that the question was a riddle.

I also posed the same question to OpenAI’s smaller GPT-4o mini model and it failed miserably. It simply said I’d have 38 legs, adding mine to the animals’ count.
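Both wrong answers come from the same simple tally. A quick Python check, assuming every animal in the riddle has four legs:

```python
# The riddle: 4 cows, 3 dogs and 2 cats, all assumed to be four-legged.
animals = {"cow": 4, "dog": 3, "cat": 2}  # animal -> how many I own
LEGS_PER_ANIMAL = 4

animal_legs = sum(count * LEGS_PER_ANIMAL for count in animals.values())
my_legs = 2  # the question only asks about me

print(animal_legs)            # 36, the figure GPT-4o mentioned first
print(animal_legs + my_legs)  # 38, GPT-4o mini's answer
print(my_legs)                # 2, the intended answer
```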

Prompt 2: Investment return calculation, while accounting for currency depreciation

Since simple prompts only require a few seconds of thinking, I decided to take things up a notch. In this prompt, I asked ChatGPT to find the better investment between two assets with differing returns and risks. This time, o1-preview took 11 seconds to think before responding. Once again, it delivered the correct answer while explaining each step.

Interestingly, GPT-4o also arrived at the same conclusion, but it did not compute the figures on its own. Instead, it generated the Python code necessary to perform the calculations and executed it via ChatGPT’s advanced data analysis feature. So while the output is the same, the process is more roundabout. Coding as a workaround also has the potential to fail quite spectacularly, as I would soon find out.
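For illustration, here is a minimal Python sketch of the core calculation such a prompt involves. The returns, depreciation rate, principal, and time horizon are hypothetical placeholders, not the figures from my prompt, and the sketch ignores risk entirely; it only shows how a falling currency eats into a foreign asset's return, using (1 + return) × (1 - depreciation) - 1.

```python
def effective_annual_return(nominal_return: float, currency_depreciation: float) -> float:
    """Annual return in your home currency after the asset's currency loses value.

    Both arguments are annual rates as decimals, e.g. 0.05 for 5%.
    """
    return (1 + nominal_return) * (1 - currency_depreciation) - 1


def future_value(principal: float, annual_return: float, years: int) -> float:
    """Compound the principal once per year."""
    return principal * (1 + annual_return) ** years


# Hypothetical inputs, NOT the figures from my prompt.
PRINCIPAL = 10_000
YEARS = 5
asset_a = effective_annual_return(0.08, 0.0)   # home-currency asset, 8% a year
asset_b = effective_annual_return(0.12, 0.05)  # 12% a year, but its currency falls 5% a year

for name, rate in (("A", asset_a), ("B", asset_b)):
    value = future_value(PRINCIPAL, rate, YEARS)
    print(f"Asset {name}: {rate:.2%} effective, ${value:,.2f} after {YEARS} years")
```

In this made-up scenario, the asset with the higher headline return ends up behind once depreciation is applied (6.4% versus 8% a year).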

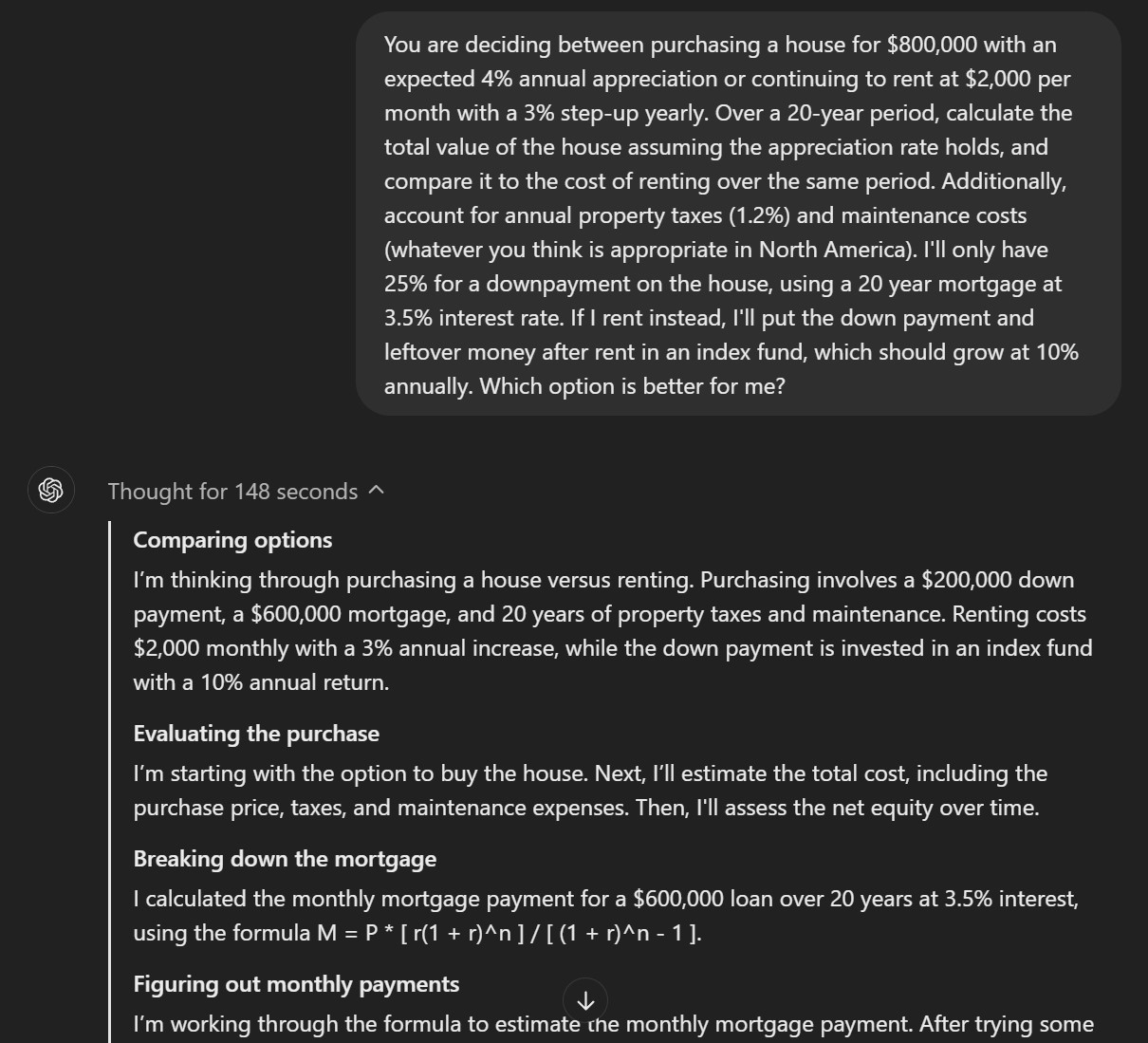

Prompt 3: Which is better, buying a house or renting?

If you hang around financially savvy folks, you’ll know that renting vs buying a house is a super divisive topic that involves a lot of variables, both financial and otherwise. Luckily, we can ask ChatGPT to do the math for us — the o1-preview model put 37 seconds’ worth of thought into this question and broke it down into 12 different steps.

I provided several figures, including my down payment amount, interest rate, expected return on investment if I rented instead, and more. This made the question a lot more complicated: ChatGPT had to first compute the cost of an $800,000 home with a $200,000 down payment. The remaining $600,000 would be financed with a 20-year mortgage at 3.5% interest. If I rented instead, I’d be able to invest the entire $200,000 in an index fund and also save any income left over after paying rent.
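The mortgage portion of that math is standard amortization. A minimal Python sketch using the figures above, assuming a fixed rate compounded monthly and ignoring property taxes, insurance, and closing costs:

```python
def monthly_mortgage_payment(principal: float, annual_rate: float, years: int) -> float:
    """Fixed-rate amortization formula: M = P * r / (1 - (1 + r) ** -n)."""
    r = annual_rate / 12  # monthly interest rate
    n = years * 12        # number of monthly payments
    if r == 0:
        return principal / n
    return principal * r / (1 - (1 + r) ** -n)


home_price = 800_000
down_payment = 200_000
loan = home_price - down_payment  # $600,000 financed

payment = monthly_mortgage_payment(loan, 0.035, 20)
total_paid = payment * 20 * 12
print(f"Monthly payment: ${payment:,.2f}")
print(f"Total interest over 20 years: ${total_paid - loan:,.2f}")
```

With these inputs, the payment works out to roughly $3,480 a month before any of the costs the sketch leaves out.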

The o1-preview model responded with a 1,000-word breakdown of the problem, concluding that my net worth would be higher by approximately $716,620 after 20 years if I rented instead of buying a home.

OpenAI's prior GPT-4o model can't keep up with o1-preview in advanced reasoning tasks.

Feeding the same prompt to GPT-4o yielded a much more disappointing outcome. The model tried to generate and run Python code to solve this problem, but failed twice before succeeding on the third try. Even then, it responded incorrectly and suggested I’d save money by buying a home instead. It only admitted fault when I pointed out a discrepancy in its calculations.

Since many more variables can come into play, I also asked o1-preview to factor in property appreciation, maintenance costs, and taxes for the home purchase, as well as a potential 3% annual rent increase. This time, it took 142 seconds to think before responding with a plausible conclusion, which I think is very impressive.
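Rent escalation is the easiest of those factors to model. A minimal Python sketch, using a hypothetical $2,500 starting rent (not the figure from my prompt) and assuming the 3% increase applies once a year:

```python
def total_rent(starting_monthly_rent: float, annual_increase: float, years: int) -> float:
    """Sum of rent paid when the rent rises by a fixed percentage each year."""
    total = 0.0
    monthly = starting_monthly_rent
    for _ in range(years):
        total += monthly * 12           # twelve payments at this year's rate
        monthly *= 1 + annual_increase  # the increase kicks in the following year
    return total


def total_rent_closed_form(starting_monthly_rent: float, annual_increase: float, years: int) -> float:
    """Same sum via the geometric series formula."""
    annual = starting_monthly_rent * 12
    if annual_increase == 0:
        return annual * years
    return annual * ((1 + annual_increase) ** years - 1) / annual_increase


# Hypothetical starting rent, NOT the figure from my prompt.
STARTING_RENT = 2_500
loop_total = total_rent(STARTING_RENT, 0.03, 20)
formula_total = total_rent_closed_form(STARTING_RENT, 0.03, 20)
print(f"Rent paid over 20 years: ${loop_total:,.2f}")
```

The loop and the geometric-series formula agree; with these hypothetical inputs, 20 years of rent adds up to a little over $806,000.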

How to use ChatGPT’s o1-preview and o1-mini models

As you may have guessed, the o1 model series requires copious amounts of computational power. And given that ChatGPT itself has been rumored to be unprofitable since its release in 2022, it’s not surprising that OpenAI has locked o1-preview behind a paywall. In other words, you will need a ChatGPT Plus subscription to select the latest model from the dropdown menu pictured above.

In fact, the model is so expensive that OpenAI has also placed a hard cap of 50 messages per week on top of the $20 per month paywall. Once you exhaust this quota, your only options are to wait or pay for a second ChatGPT Plus account. OpenAI has imposed such rate limits in the past, especially around the time GPT-4 was first introduced, but this instance is the most aggressive one yet.

Luckily, the vast majority of ChatGPT prompts do not benefit from o1’s thinking capabilities. And if you are a programmer, the o1-mini model within ChatGPT is also rolling out to the free plan in a limited capacity.

Is o1-preview free to use?

No, you need to pay for a ChatGPT Plus subscription to use the o1-preview model. However, the o1-mini model is available on the free tier in a limited capacity.

All in all, ChatGPT’s new o1-preview model is very impressive and worth a look if you have math and programming questions. It might not be the best choice for the vast majority of tasks, but it’s the closest we have come to emulating human reasoning and thought. Since most users won’t benefit from o1-preview’s improved logical reasoning skills or math capabilities, I cannot recommend switching to it full time. The weekly message limit and lack of web browsing support also mean I’ll continue using GPT-4o going forward. And if you only use ChatGPT a few times a day, you can easily get by with a free account.

Perplexity’s Pro Search feature also implemented multi-step reasoning a few months ago and it too delivered impressive results in my testing. If you would like a peek at chain-of-thought AI reasoning without paying for it, I’d recommend trying it out since you get five Perplexity Pro searches every few hours on the free tier. I haven’t tested it against OpenAI’s o1-preview head-to-head yet, but it’s clear that competition in the AI space has forced ChatGPT to evolve and I can’t wait to see where it is headed next.
