Edgar Cervantes / Android Authority

TL;DR

- Google has introduced Gemini Nano support on the latest Chrome Canary builds.

- The feature is locked behind two Chrome flags and requires a manual download of the language model.

- Gemini Nano runs entirely offline, with sub-one-second response times for simple queries on modern computers.

Google’s Gemini Nano language model is small enough to fit and run entirely on a Pixel 8, but we haven’t seen many use cases for it yet. The model is only used for features like Gboard’s Smart Reply or AI-generated summaries in the Recorder app.

Luckily, Google seems to have grander ambitions on the desktop side as it has now started testing a Gemini Nano integration within Chrome. This means you can converse with a modern large language model entirely within a web browser — even if you’re offline!

Gemini Nano for Chrome was announced just last month, with Google promising to open up access to developers for testing soon. The feature was then spotted in version 127 of Chrome Canary a few weeks ago, and enterprising developers have already created web apps that showcase the local model’s capabilities. One such demo comes courtesy of Twitter/X user Morten Just, who has demonstrated how quickly Gemini Nano can respond.

The video shows Gemini Nano responding in real time, with latency in the low hundreds of milliseconds. However, the developer admitted to running the demo on a Mac with Apple’s M3 Max chip, which is considerably faster than the average desktop. Still, we’re talking about a reply that arrives faster than the average human response time, so even slower hardware should be capable of handling Gemini Nano.
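If you want to check latency figures like these on your own machine, a small timing wrapper is enough. The sketch below is only an illustration: `PromptSession` and `timePrompt` are hypothetical names, and the interface is a stand-in for whatever session object your Chrome build exposes, not Chrome’s actual API.

```typescript
// Hypothetical minimal shape of a local-model session. The real experimental
// Chrome API may look different and has changed between Canary releases.
interface PromptSession {
  prompt(text: string): Promise<string>;
}

interface TimedReply {
  reply: string;
  elapsedMs: number;
}

// Sends one prompt and reports how long the complete reply took to arrive.
// `now` is injectable so the function can be exercised with a fake clock.
async function timePrompt(
  session: PromptSession,
  text: string,
  now: () => number = () => Date.now(),
): Promise<TimedReply> {
  const start = now();
  const reply = await session.prompt(text);
  return { reply, elapsedMs: now() - start };
}
```

This measures the time to a complete reply. A streaming interface would also let you measure time to the first token, which is closer to what a viewer perceives as “real time.”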

Case in point: I installed Chrome Canary on my desktop, which is equipped with an AMD Ryzen 5 5600X CPU and an Nvidia RTX 3060 Ti GPU. These are mid-range PC specs, but they are enough to run larger models like Meta’s Llama 3.

How to enable Gemini Nano in Chrome: My experience

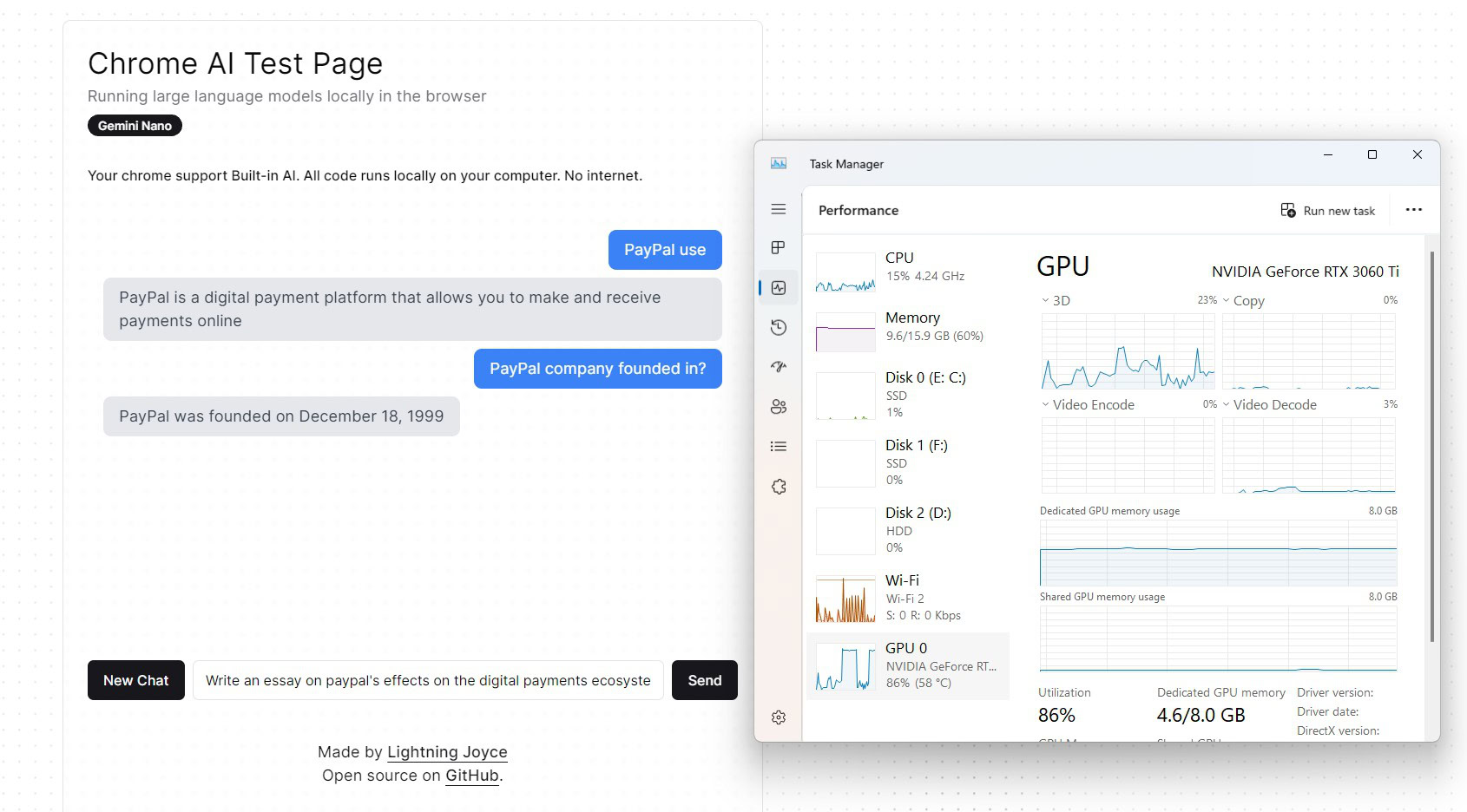

After enabling a few feature flags within Chrome Canary, I could see persistent internet traffic indicating that the browser had started downloading the model. Google doesn’t offer an interface to chat with Gemini Nano yet, but Twitter user Lightning Joyce has developed an open-source web app that you can use in the meantime. It looks like any other chatbot on the surface, only stripped back to the bare essentials. Simply load the page, disconnect your computer from the internet, and you can test Gemini Nano’s offline performance.

With the prep out of the way, how did the model perform on my system? Shockingly well, and nearly on par with the demo video above. The speed isn’t groundbreaking by itself, though, as my hardware could already output 50 tokens per second (roughly 35 to 40 English words per second, since a token is usually a little shorter than a word) while generating responses via Llama 3. Instead, the key point here is that Gemini Nano can be used as a makeshift chatbot and will easily run on a wider range of hardware due to its smaller size.
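For readers who want to turn a tokens-per-second figure into something more tangible, the arithmetic is simple. This is a rough sketch: the function names are made up for illustration, and the words-per-token ratio is an assumption you supply. English text is often quoted at around 0.75 words per token, a rule of thumb rather than a figure measured for this article, while a ratio of 1 treats every token as a whole word.

```typescript
// Tokens generated per second, given a token count and elapsed wall-clock time.
function tokensPerSecond(tokenCount: number, elapsedMs: number): number {
  if (elapsedMs <= 0) throw new RangeError("elapsedMs must be positive");
  return tokenCount / (elapsedMs / 1000);
}

// Converts token throughput into an approximate word throughput.
// wordsPerToken is an assumption chosen by the caller, not a measured value.
function wordsPerSecond(tokensPerSec: number, wordsPerToken: number): number {
  return tokensPerSec * wordsPerToken;
}

const rate = tokensPerSecond(100, 2000); // 100 tokens in 2 seconds
console.log(rate);                       // 50
console.log(wordsPerSecond(rate, 1));    // 50
console.log(wordsPerSecond(rate, 0.75)); // 37.5
```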

Calvin Wankhede / Android Authority

However, I noticed that Gemini Nano would fail to respond whenever I entered an open-ended prompt like “Write an essay on…” or “History of Android.” Whenever this happened, my computer’s GPU usage would spike — up to 90% load for a handful of seconds before settling back down. This could be a bug in the web app, but it’s worth remembering that Gemini Nano in Chrome is an experimental feature after all. The model could handle single-paragraph responses just fine.
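A web app built on an experimental model like this can at least avoid hanging forever when no reply comes back. Below is a minimal sketch of a timeout guard. `PromptSession` and `promptWithTimeout` are hypothetical names, and the session interface is a stand-in rather than Chrome’s real API.

```typescript
// Hypothetical minimal shape of a local-model session (an assumption).
interface PromptSession {
  prompt(text: string): Promise<string>;
}

// Resolves with the model's reply, or rejects if none arrives within timeoutMs.
// The timer is cleared as soon as the prompt settles either way.
function promptWithTimeout(
  session: PromptSession,
  text: string,
  timeoutMs: number,
): Promise<string> {
  return new Promise<string>((resolve, reject) => {
    const timer = setTimeout(
      () => reject(new Error(`No reply within ${timeoutMs} ms`)),
      timeoutMs,
    );
    session.prompt(text).then(
      (reply) => {
        clearTimeout(timer);
        resolve(reply);
      },
      (err) => {
        clearTimeout(timer);
        reject(err);
      },
    );
  });
}
```

Note that this only stops the caller from waiting. It does not cancel whatever work the model is still doing in the background, so GPU load may stay elevated for a while after the timeout fires.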

The good news is that Gemini Nano will only take up 2GB of your GPU’s video memory (or RAM if your computer doesn’t have dedicated graphics hardware). And even with the other caveats above, Gemini Nano remains impressively quick and usable. Not to mention, having it run entirely within Chrome could make it much more accessible than any other offline language model we’ve seen.

If you’d like to use Gemini Nano on your own machine, follow these steps:

- Install Chrome Canary version 128 or newer.

- Navigate to chrome://flags

- Enable the prompt-api-for-gemini-nano and optimization-guide-on-device-model flags.

- Navigate to chrome://components, look for “Optimization Guide On Device Model,” and click “Check for update” to start the download.

- Wait for the model download to complete — this can take a while depending on your internet speed. Gemini Nano is roughly 2GB in size.

- Navigate to a web app like chromeai.pages.dev, disconnect your internet connection, and send your first message. You should see a response nearly instantly.
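If you would rather talk to the model from your own page than from a third-party web app, the steps above can be followed by a small script along these lines. Treat it as a sketch under assumptions: the method names `canCreateTextSession`, `createTextSession`, and `prompt`, and the `"readily"` status string, reflect the experimental `window.ai` surface reported for early Canary builds. Google has not committed to them, and they may not match your build. `askLocalModel` is a made-up helper name.

```typescript
// ASSUMPTION: these shapes mirror the experimental, unstable `window.ai`
// object from early Chrome Canary builds. They are not a documented stable API.
interface TextSession {
  prompt(text: string): Promise<string>;
}

interface ExperimentalAi {
  canCreateTextSession(): Promise<string>; // assumed to be "readily" once the model is downloaded
  createTextSession(): Promise<TextSession>;
}

// Checks that the model is available, then sends a single prompt.
// The `ai` object is passed in so the function never touches globals directly.
async function askLocalModel(
  ai: ExperimentalAi | undefined,
  text: string,
): Promise<string> {
  if (!ai) {
    throw new Error("On-device model API not found; check the Chrome flags");
  }
  const status = await ai.canCreateTextSession();
  if (status !== "readily") {
    throw new Error(`Model not ready (status: ${status})`);
  }
  const session = await ai.createTextSession();
  return session.prompt(text);
}

// In a browser console, usage might look like this (global name assumed):
// askLocalModel((globalThis as { ai?: ExperimentalAi }).ai, "Hello!").then(console.log);
```

If the first check throws, the flags are probably not enabled. If the status is anything other than ready, the model download on chrome://components most likely has not finished.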
