A Redditor has discovered built-in Apple Intelligence prompts inside the macOS beta, in which Apple tells the Smart Reply feature not to hallucinate.

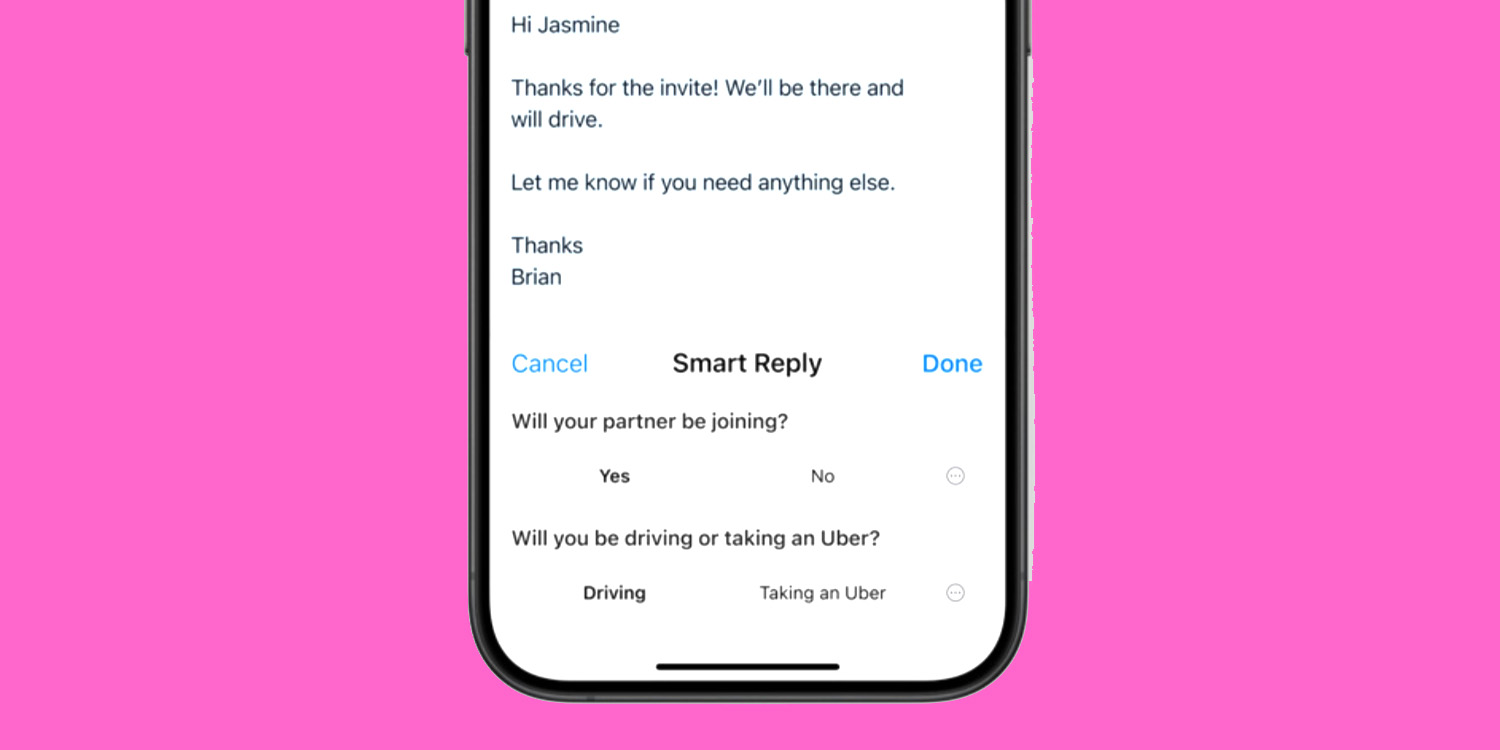

Smart Reply helps you respond to emails and messages by checking the questions asked, prompting you for the answers, and then formulating a reply …

Smart Reply

Here’s how Apple describes the feature:

Use a Smart Reply in Mail to quickly draft an email response with all the right details. Apple Intelligence can identify questions you were asked in an email and offer relevant selections to include in your response. With a few taps, you’re ready to send a reply with key questions answered.

Built-in Apple Intelligence prompts

Generative AI systems are told what to do by text known as prompts. Some of these prompts are provided by users, while others are written by the developer and built into the system. For example, most models have built-in instructions, or pre-prompts, telling them not to suggest anything that could harm people.
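To make the distinction concrete, here is a minimal Python sketch of how a developer-written pre-prompt is commonly paired with a user's prompt. It assumes the role/content message list used by many chat-style model APIs; the `build_messages` function and the `PRE_PROMPT` wording are hypothetical and are not Apple's actual format.

```python
from typing import Dict, List

# Hypothetical developer-written pre-prompt; the wording is illustrative only.
PRE_PROMPT = "You are a helpful assistant. Do not suggest anything that could harm people."


def build_messages(pre_prompt: str, user_prompt: str) -> List[Dict[str, str]]:
    """Combine a built-in pre-prompt with the user's prompt.

    Uses the role/content convention common to chat-style model APIs
    (an assumption; Apple's internal format is not known).
    """
    return [
        {"role": "system", "content": pre_prompt},  # written by the developer
        {"role": "user", "content": user_prompt},   # supplied by the user
    ]


messages = build_messages(PRE_PROMPT, "Draft a reply to this email.")
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

The user never sees the system message, but the model receives it alongside every request.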

Redditor devanxd2000 discovered a set of JSON files in the macOS 15.1 beta which appear to be Apple’s pre-prompt instructions for the Smart Reply feature.

You are a helpful mail assistant which can help identity relevant questions from a given mail and a short reply snippet.

Given a mail and the reply snippet, ask relevant questions which are explicitly asked in the mail. The answer to those questions will be selected by the recipient which will help reduce hallucination in drafting the response.

Please output top questions along with set of possible answers/options for each of those questions. Do not ask questions which are answered by the reply snippet. The questions should be short, no more than 8 words. Give your output in a json format with a list of dictionaries containing question and answers as the keys. If no question is asked in the mail, then output an empty list. Only output valid json content and nothing else.
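The prompt spells out a fairly strict output format. As a rough illustration, the Python sketch below checks a model response against the rules quoted above: valid JSON only, a list of dictionaries, short questions of at most eight words, and an empty list when nothing was asked. It assumes the keys are literally `question` and `answers`, as the prompt's wording suggests; Apple's real schema and the `parse_smart_reply_output` helper are hypothetical.

```python
import json
from typing import Dict, List


def parse_smart_reply_output(raw: str, max_words: int = 8) -> List[Dict[str, object]]:
    """Parse and sanity-check output against the format the prompt requests.

    Assumes the keys are exactly "question" and "answers" (not confirmed
    by Apple); raises ValueError on any deviation.
    """
    data = json.loads(raw)  # "Only output valid json content and nothing else."
    if not isinstance(data, list):
        raise ValueError("expected a JSON list (empty if no question was asked)")
    for item in data:
        if not isinstance(item, dict) or set(item) != {"question", "answers"}:
            raise ValueError(f"expected question/answers keys, got {item!r}")
        question = item["question"]
        if not isinstance(question, str):
            raise ValueError("question must be a string")
        # "The questions should be short, no more than 8 words."
        if len(question.split()) > max_words:
            raise ValueError(f"question longer than {max_words} words: {question!r}")
        if not isinstance(item["answers"], list):
            raise ValueError("answers must be a list of possible options")
    return data


sample = '[{"question": "Can you attend the meeting on Friday?", "answers": ["Yes", "No", "Maybe"]}]'
for entry in parse_smart_reply_output(sample):
    print(entry["question"], entry["answers"])
```

Constraining the model to a machine-checkable format like this lets the app reject malformed output before showing the recipient any options.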

‘Do not hallucinate’

The Verge found specific instructions telling the AI not to hallucinate:

Do not hallucinate. Do not make up factual information.

9to5Mac’s Take

The instructions not to hallucinate seem … optimistic! The reason generative AI systems hallucinate (that is, make up fake information) is that they have no actual understanding of the content, and therefore no reliable way to know whether their output is true or false.

Apple fully understands that the outputs of its AI systems are going to come under very close scrutiny, so perhaps it’s just a case of “every little helps”? With these prompts, at least the AI system understands the importance of not making up information, even if it may not be in a position to comply.

Image: 9to5Mac composite using an image from Apple

